Many of us have long dreamed of personal cloud (née grid) computing that is as easy as desktop computing and works with the same programming setup. Various approximations of that capability exist now, but they largely depend on institutional or corporate systems and dedicated system administrators, and in many other ways sacrifice the essentially independent nature of personal computers. This year, though, it is clear we’re getting very close to personal cloud computing without compromise. The first big sign was the splashy arrival of Docker, which gives us freedom inside a Linux container and manageability across deployment platforms. The second shoe to drop seems to be Google’s Kubernetes, which cloud computing providers of all stripes have adopted for orchestrating Docker containers. Proposals shown at Docker Global Hack Day #2 suggest that we can expect such things to become easily accessible by being integrated into Docker itself as well.

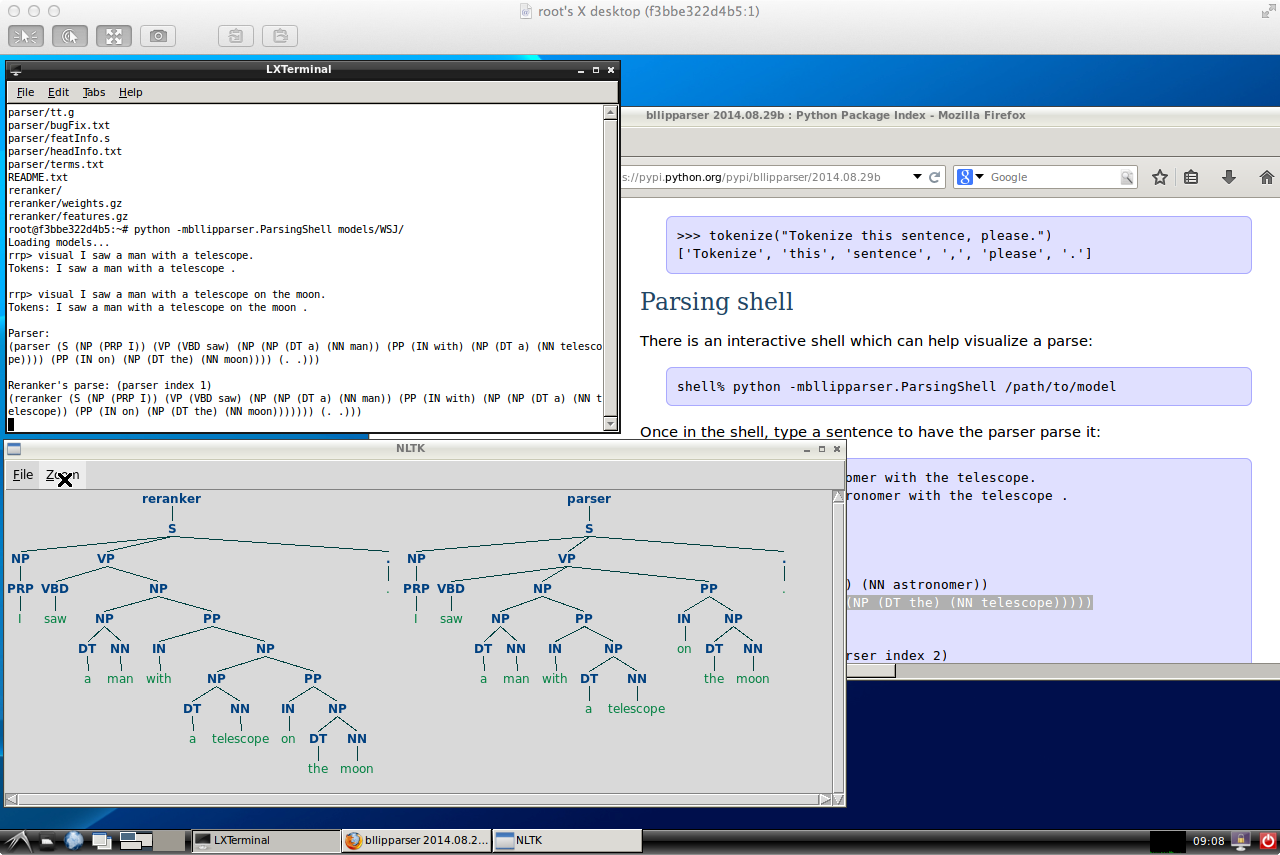

My current programming work involves machine learning experiments in computational linguistics, which most often means running HTCondor DAGMan workflows to provide concurrency for complex compute jobs. Naturally, what I would like to have is a way to develop such programs that is seamless from my desktop (for code, test, and small jobs) to the cloud. HTCondor works fine on desktops, grids, and clouds, but the installation, configuration, and administration for each of those environments are different, fiddly, and often time-consuming. Therefore I’ve taken a stab at packaging up HTCondor in a Docker container and configuring it for Kubernetes to run on Google Compute Engine. The current setup is mostly a proof of concept, but I wanted to share the details so others who are interested can give it a whirl.

Prerequisites

Getting all this stuff set up belies the ease of use I have in mind, which is that it will eventually be no more involved than installing Docker and signing up for a Google Cloud account. But such is life on the bleeding edge.

- Install Docker. Works for Mac OS X, Windows, and Linux (easy click-to-install for the first two). Instructions here.

- Install the Google Cloud SDK. Instructions here. If you haven’t used GCE before then you should go through the Quickstart to get your credentials set up and your installation checked out. Be sure to set your default project id with `gcloud config set project <your-project-id>`.

- Create a project on Google Cloud using the Developer Console. You’ll need to have an account with billing enabled. There are free credit deals for new users. Enable the cloud and compute engine APIs (a few of these aren’t strictly needed but do no harm here):

    - Google Cloud Datastore API

    - Google Cloud Deployment Manager API

    - Google Cloud DNS API

    - Google Cloud Monitoring API

    - Google Cloud Storage

    - Google Cloud Storage JSON API

    - Google Compute Engine

        - I’ve had trouble with the quota setting for this. You should get 24 CPUs per region by default, but apparently enabling some other API can change that to 8 or 2. The solution I found is to disable GCE and then reenable it. The most repeatable results seem to come from enabling the other APIs first and then enabling GCE by clicking on the “VM instances” item in the Compute Engine menu (which may require a browser page reload).

        - Update: This quota resetting behavior is due to having an account set to “Free Trial” mode. Upgrade to regular pay and it will stop. Although it doesn’t say so anyplace I could find, it seems that ending the “Free Trial” period does not erase free credits you may have received when signing up.

    - Google Compute Engine Autoscaler API

    - Google Compute Engine Instance Group Manager API

    - Google Compute Engine Instance Groups API

    - Google Container Engine API

- Install Kubernetes from source. I recommend against using a binary distribution since the one I used recently didn’t work properly. Instructions here. The script you want is `build/release.sh`. Set an environment variable `KUBE_HOME` to the directory where you’ve installed Kubernetes for use in the next steps.
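Before moving on, it can help to confirm that the prerequisites above are actually in place. The following is only a sketch: the function name `check_prereqs` is made up for illustration, and it assumes that a usable Kubernetes checkout can be recognized by the presence of `cluster/gce/config-default.sh` under `$KUBE_HOME`.

```shell
#!/bin/sh
# Hypothetical helper: report which prerequisites appear to be missing.
# Prints one line per problem and returns non-zero if anything is missing.
check_prereqs() {
    missing=0

    # Docker and the Google Cloud SDK must both be on PATH.
    for tool in docker gcloud; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool is not on PATH"
            missing=1
        fi
    done

    # KUBE_HOME must point at the Kubernetes directory.
    # ASSUMPTION: cluster/gce/config-default.sh marks a usable checkout.
    if [ -z "${KUBE_HOME:-}" ]; then
        echo "missing: KUBE_HOME is not set"
        missing=1
    elif [ ! -f "$KUBE_HOME/cluster/gce/config-default.sh" ]; then
        echo "missing: no cluster/gce/config-default.sh under $KUBE_HOME"
        missing=1
    fi

    return "$missing"
}
```

Calling `check_prereqs && echo "ready"` prints `ready` only when nothing is reported missing. It does not check your Google Cloud credentials or which APIs are enabled.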

Git the Condor-Kubernetes Files

The Dockerfile and Kubernetes configuration files are on GitHub at https://github.com/jimwhite/condor-kubernetes.

Turn up the Condor-Kubernetes Cluster

There is a script start-condor-kubernetes.sh that does all these steps at once, but I recommend doing them one at a time so you can see whether each one succeeds. I’ve seen occasions where the cAdvisor monitoring service doesn’t start properly, but you can ignore that if you don’t need to use it (and it can be loaded separately by following the instructions in $KUBE_HOME/examples/monitoring). The default settings in $KUBE_HOME/cluster/gce/config-default.sh are for four minions (NUM_MINIONS=4) plus one master, all n1-standard-1 instances, in zone us-central1-b.

There is a trusted build for the Dockerfile I use for HTCondor in the Docker Hub Registry at https://registry.hub.docker.com/u/jimwhite/condor-kubernetes/. To use a different repository you would need to modify the image setting in the condor-manager.json and condor-executor-controller.json files.

Spin up the Condor manager pod. It is currently configured by the userfiles/start-condor.sh script to be a manager, execute, and submit host. The execute option is pretty much just for testing though. The script determines whether it is the manager by looking for the CONDORMANAGER_SERVICE_HOST variable configured by Kubernetes. Using the Docker-style variables would be the way to go if this container scheme for Condor were used with Docker container linking too.
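The role check described above can be sketched in a few lines of shell. This is not the actual userfiles/start-condor.sh. The function names are hypothetical, and it assumes that a pod which does not see CONDORMANAGER_SERVICE_HOST is the manager (because it was started before the service existed), while any pod that does see it is an executor. The daemon names are standard HTCondor DAEMON_LIST entries.

```shell
#!/bin/sh
# Hypothetical helpers, not the real start-condor.sh.
# ASSUMPTION: no CONDORMANAGER_SERVICE_HOST in the environment means this
# pod is the manager; if the variable is present, this pod is an executor.
condor_role() {
    if [ -n "${CONDORMANAGER_SERVICE_HOST:-}" ]; then
        echo executor
    else
        echo manager
    fi
}

# Daemons to run for each role, following the description in this post:
# the manager is also an execute and submit host, and the executors
# currently have submit enabled as well.
condor_daemon_list() {
    case "$(condor_role)" in
        manager)  echo "MASTER, COLLECTOR, NEGOTIATOR, STARTD, SCHEDD" ;;
        executor) echo "MASTER, STARTD, SCHEDD" ;;
    esac
}
```

Dropping SCHEDD from the executor list would give an execute-only executor, for applications that never submit jobs from the executor pods.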

Start the Condor manager service. The executor pods will be routed to the manager via this service by using the CONDORMANAGER_SERVICE_HOST (and ..._PORT) environment variables.
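As an illustration of how an executor might turn those service variables into HTCondor settings, here is a minimal sketch. The function name `executor_config` is hypothetical, and the fallback to port 9618 (HTCondor's default collector port) is an assumption for the case where the port variable is absent. The real start-condor.sh may do this differently.

```shell
#!/bin/sh
# Hypothetical helper: print an HTCondor config fragment that points an
# executor at the manager, using the Kubernetes service variables.
executor_config() {
    if [ -z "${CONDORMANAGER_SERVICE_HOST:-}" ]; then
        echo "CONDORMANAGER_SERVICE_HOST is not set; is the service running?" >&2
        return 1
    fi
    # ASSUMPTION: fall back to HTCondor's default collector port.
    port="${CONDORMANAGER_SERVICE_PORT:-9618}"
    echo "CONDOR_HOST = ${CONDORMANAGER_SERVICE_HOST}"
    # $(CONDOR_HOST) is expanded by HTCondor, so keep it literal here.
    echo "COLLECTOR_HOST = \$(CONDOR_HOST):${port}"
}
```

The output could be appended to a local HTCondor configuration file before the daemons start. Which file that is depends on how the image is laid out.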

Start the executor pod replication controller. The executors currently have submit enabled too, but for applications that don’t need that capability it can be omitted to save on network connections (whose open file descriptor space on the collector can be a problem for larger clusters).

You should see 3 pods for Condor (one manager and two executors), initially in ‘Pending’ state and then ‘Running’ after a few minutes.
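The steps above can be sketched as a single dry-run script. Treat it as a sketch under stated assumptions rather than a copy of start-condor-kubernetes.sh: the `kube-up.sh` and `kubecfg.sh` invocations follow the Kubernetes guestbook example of the time, the file name `condor-manager-service.json` is a guess, and `run` and `turn_up` are hypothetical helper names. By default it only prints the commands.

```shell
#!/bin/sh
# Dry-run sketch of the turn-up sequence. It prints each command by
# default; set DRY_RUN=0 to actually execute them.
# ASSUMPTIONS (not taken from this post): the kubecfg.sh subcommands match
# the Kubernetes guestbook example, and condor-manager-service.json is a
# guessed name for the service definition file.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

turn_up() {
    kube="${KUBE_HOME:?set KUBE_HOME to your Kubernetes directory}"
    # Each step runs only if the previous one succeeded.
    run "$kube/cluster/kube-up.sh" &&
    run "$kube/cluster/kubecfg.sh" -c condor-manager.json create pods &&
    run "$kube/cluster/kubecfg.sh" -c condor-manager-service.json create services &&
    run "$kube/cluster/kubecfg.sh" -c condor-executor-controller.json create replicationControllers &&
    run "$kube/cluster/kubecfg.sh" list pods
}
```

Running `turn_up` with the default `DRY_RUN=1` is a cheap way to review the sequence before spending money on instances. Chaining with `&&` stops at the first failing step, which is the same reason for running the steps one at a time.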

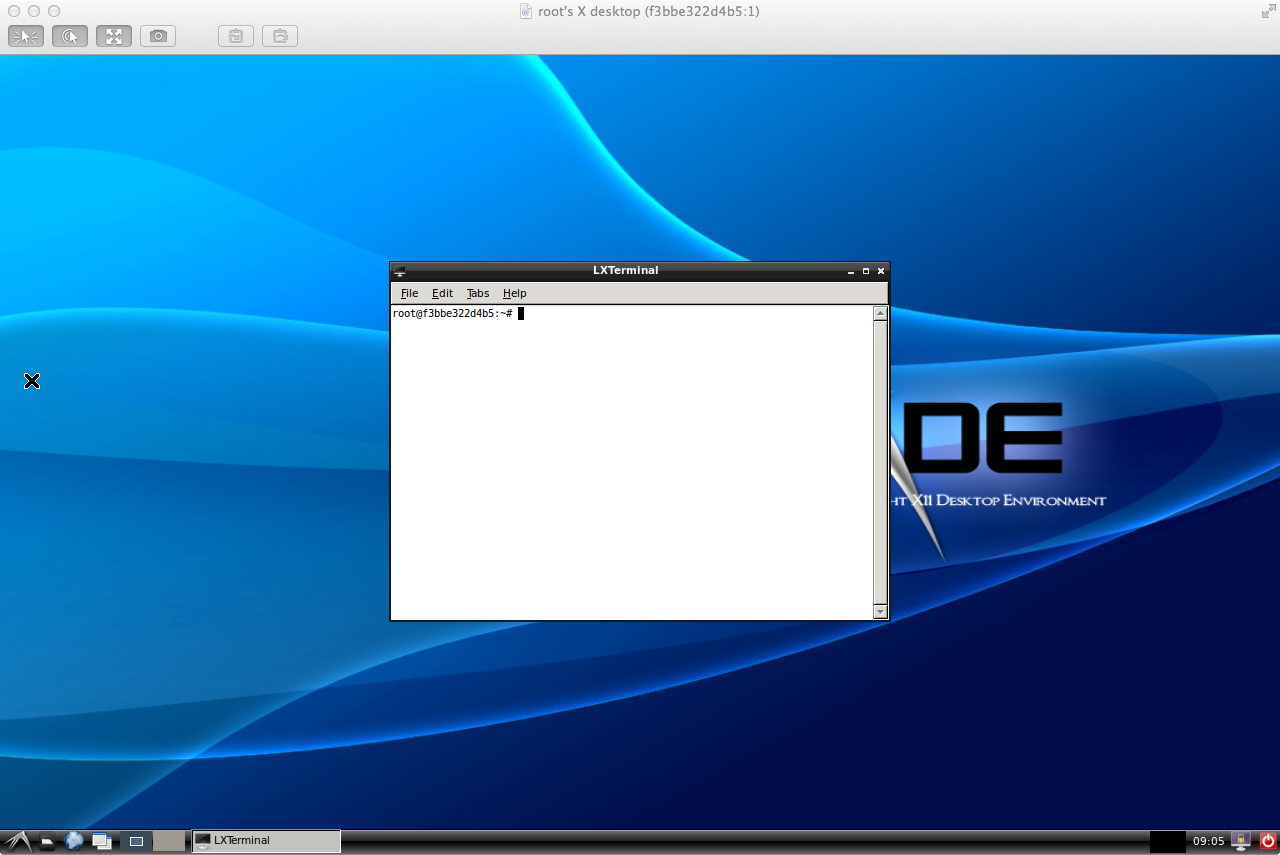

SSH to a Minion

Find the id of a minion that is running Condor from the list of pods. You can then ssh to it thusly:

Then use docker ps to find the id of the container that is running Condor and use docker exec to run a shell in it:
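Picking the container id out of the `docker ps` listing can be automated with a small filter. This is a sketch: `condor_container_ids` is a hypothetical helper, and it assumes the default `docker ps` table layout (a header row, with the container id in the first column and the image name in the second).

```shell
#!/bin/sh
# Hypothetical helper: read `docker ps` output on stdin and print the ids
# of containers whose IMAGE column contains the given text.
# ASSUMPTION: default `docker ps` table layout with one header line.
condor_container_ids() {
    awk -v pat="${1:-condor-kubernetes}" \
        'NR > 1 && index($2, pat) { print $1 }'
}

# Usage on a minion (not executed here):
#   docker ps | condor_container_ids
#   docker exec -it <container-id> /bin/bash   # assumes bash is in the image
```

The default pattern matches the jimwhite/condor-kubernetes image. Pass a different string as the first argument if you built the image under another name.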

And condor_status should show nodes for the manager and the two executor pods:

Changing the Executor Replica Count

The Condor executor pod replica count is currently set to 2 and can be changed using the resize command (see $KUBE_HOME/examples/update-demo for another example of updating the replica count).

If you raise the count beyond what the minions can host and then list the pods, you’ll see that some executors are ‘<unassigned>’ because the number of minions is too low. Auto-scaling for Kubernetes on Google Cloud is currently in the works and is key to making this a generally useful appliance.

Shut Down the Cluster

Be sure to turn the lights off when you’re done…

Next Steps

There remain several things to do to make this a truly easy-to-use and seamless research tool. A key reason for using Docker here, besides leveraging cloud services, is being able to containerize the application being run. While using Docker images as Condor job executables is a natural progression (as is Docker volume-based data management), the model we have here is just as good for many purposes, since we simply bundle Condor and the application together in a single image. The way I plan to use that is to add a submit-only pod which submits the workflow and deals with data management. Of course, for bigger data workflows a distributed data management tool such as GlusterFS or HDFS would be used (both of which have already been Dockerized).